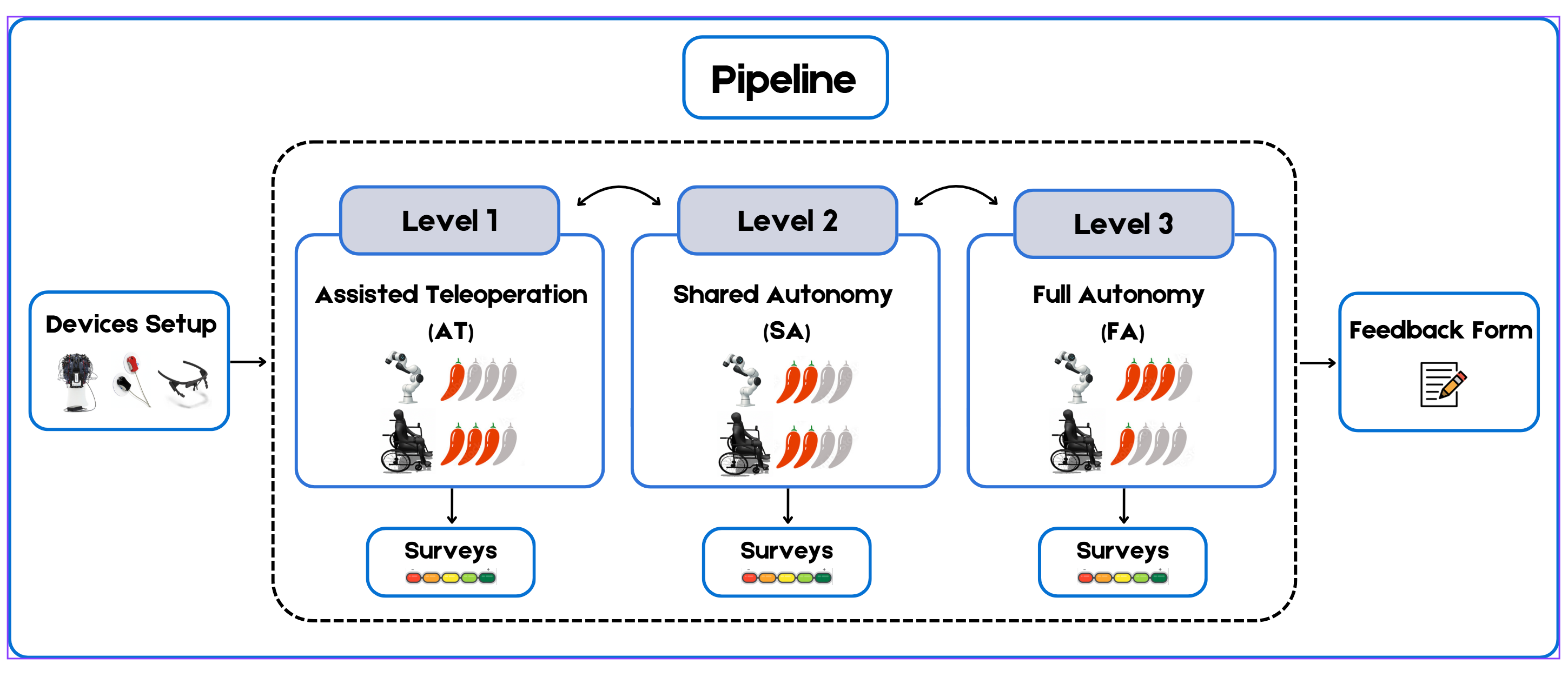

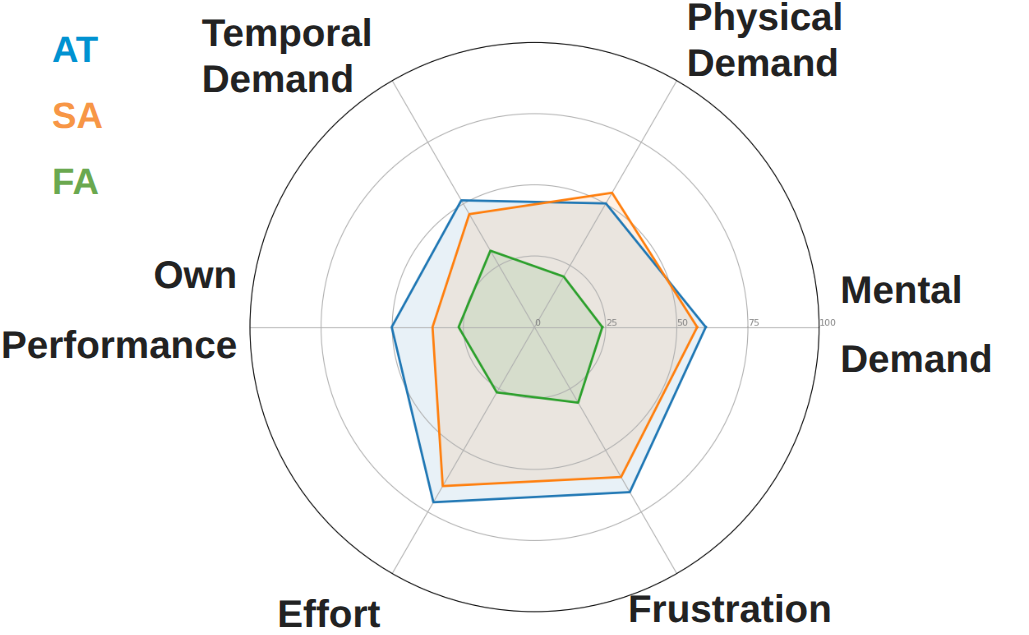

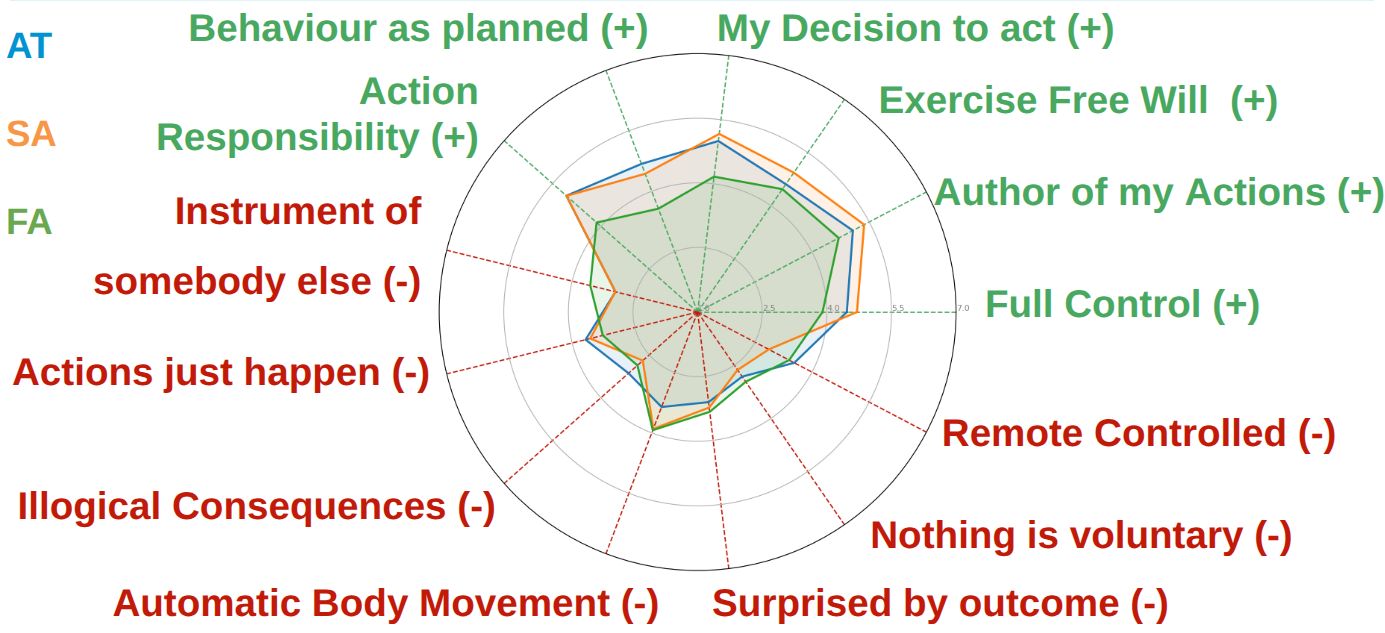

Assisted Teleoperation

The most demanding mode, requiring continuous attention and fine control. Participants felt strongly involved, but the effort and fatigue were noticeably higher.

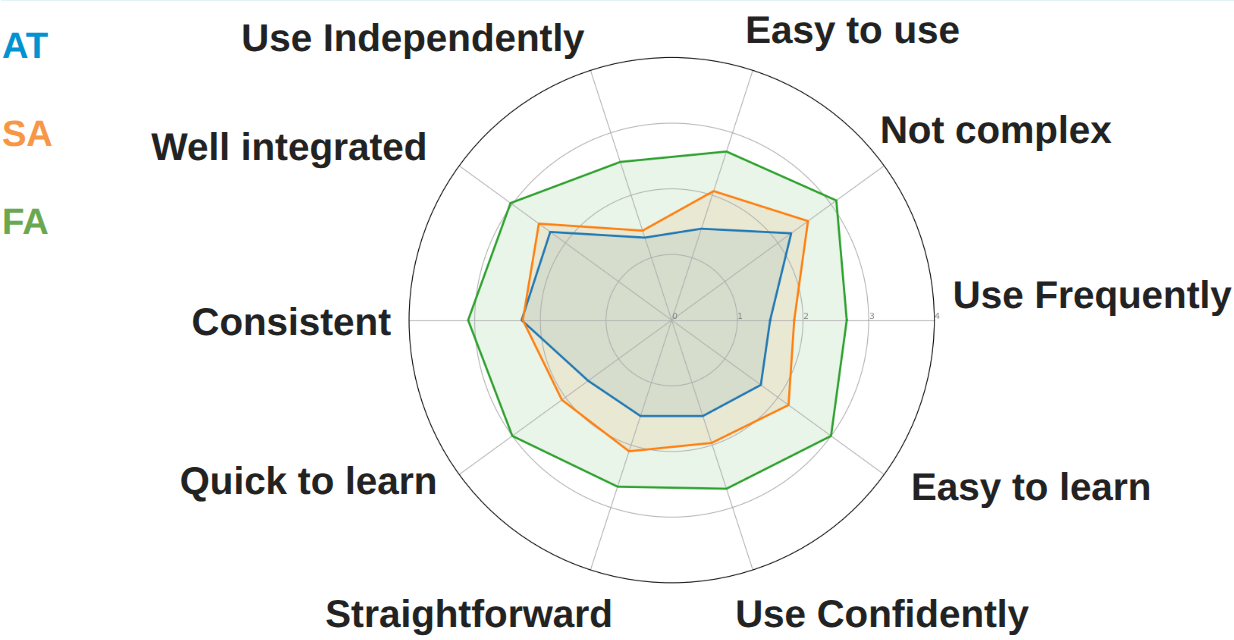

Shared Autonomy

The most balanced mode. Users remained in control while receiving meaningful assistance, resulting in smoother interaction, lower workload, and a natural sense of agency.

Full Automation

The fastest and most efficient level. Workload was minimal, though participants reported a reduced sense of direct control as the robot handled most of the task independently.

General Findings

Higher autonomy improved performance and reduced workload, while lower autonomy increased engagement and the sense of control. Overall, participants rated Shared Autonomy as the best balance between effort and efficiency.